Custom Lighting 2.0: Baking Time

After spending a month building a custom 2D lighting engine, I was ready to move on to other features. Then I tried to actually use it, and, yeah, it didn't work. So I wasted a couple more months trying to fix it, building lighting features that Unity gives you for free.

The Context

Let's go back to that first blog post. Just after I wrote it, I decided that since I'd spent a month making this thing, I might as well use it. So I picked the thing I thought could use ambient lighting the most: the truck. The truck is where you spawn into a level and how you leave it, so it will be on screen for a sizeable amount of your play time. I wanted it to feel like a truck, so I started adding headlights, tail lights, and even ambient side lighting when it was unlocked. That added up to about six more lights in the scene. That's nothing, right? Wrong. My game went from running at 60 fps in the editor to a feeble 30 with just those six new lights. As we all know from the Paper Mario TTYD remake released in 2024, the difference between 30 fps and 60 fps is a big deal to consumers. I believe rightly so, and in that moment I realized I couldn't publish this game with performance that poor. I had to find a better solution.

This happened right around the time I had a vacation and some time to ponder. So I researched how hard it would be to fix my engine; if a fix looked even a little easy, I figured that would be preferable to ripping out all the work I had just done. From YouTube tutorials to documentation to other people's blogs, I thought I'd found the easy answer I was looking for: I just needed to do my raycasting on the GPU!

2D GPU Raycasting

Simply put, my previous raycasting system used a script called MMVisionCone2D from the Unity package TopDownEngine (which is pretty good; I recommend it). It did all my raycasting for me and created meshes in the shape a light would take. I then turned those meshes into textures, which were read into the game by some shaders I wrote. This worked, but it clearly didn't perform well at scale. Some analysis in the Unity profiler showed that the issue was my usage of that script: it simply wasn't written with more than a couple of instances in a scene at a time in mind.

That's why raycasting on the GPU jumped out at me when I read about it, and I began to think about how hard it would be to implement. Since I already computed each light source into its own texture, I figured I could do the raycasting right in that compute shader with five to ten lines of code, as long as I had a way to detect physics collisions there too. The assumption that the raycast code would be easy to write was totally correct; it was very similar to raymarching, which I knew about from messing around on Shadertoy, and that helped. The real complexity wasn't the raycasting; it was getting the physics information into the compute shader. During my vacation I had convinced myself that I could apply a shader to each object that would visually represent its collider, then use a Unity camera to render all those colliders into a render texture. I could then use that render texture as a physics texture and plug it into the 'create light texture' compute shader. That was wrong, and pretty wrong at that.
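To make the "five to ten lines of code" concrete, here is a CPU sketch in Python of the idea; it is not the author's shader. It assumes the physics texture is a 2D grid of 0/1 values (1 = solid collider pixel) and that the light sits at integer pixel coordinates inside that grid. Each iteration of the pixel loop in the hypothetical `light_texture` function stands in for one compute-shader thread: march from the light toward the pixel one pixel at a time, and leave the pixel dark if any sample hits a solid cell. The linear falloff is an assumption for illustration.

```python
import math


def ray_blocked(physics, x0, y0, x1, y1):
    """March from (x0, y0) toward (x1, y1) one pixel at a time and report
    whether any sample in between lands on a solid physics-texture pixel."""
    dx, dy = x1 - x0, y1 - y0
    steps = int(math.ceil(max(abs(dx), abs(dy))))
    for i in range(1, steps):  # skip the light itself and stop before the target
        t = i / steps
        px = int(round(x0 + dx * t))
        py = int(round(y0 + dy * t))
        if physics[py][px]:
            return True
    return False


def light_texture(physics, light_x, light_y, radius):
    """Build one light's texture: brightness 1 at the light, fading linearly
    to 0 at `radius`, and 0 wherever a collider blocks the ray."""
    h, w = len(physics), len(physics[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):          # on the GPU, each (x, y) would be one thread
        for x in range(w):
            dist = math.hypot(x - light_x, y - light_y)
            if dist >= radius:
                continue
            if not ray_blocked(physics, light_x, light_y, x, y):
                out[y][x] = 1.0 - dist / radius
    return out
```

Because the march stops one step short of the target, the face of a wall that the light can see still gets lit, while everything behind it stays dark.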

The Physics Texture

Yeah, no, the physics texture would not be that simple. The only other idea I could come up with was to put a giant trigger around the screen that would detect when GameObjects entered and left it. Every frame, that trigger would run code that grabbed each GameObject's collider, turned it into a mesh, and added it to a single texture holding all the colliders: the physics texture. The great thing about this trigger-and-render solution was that it resembled the way I was already detecting light sources, so I could reuse that code. Even then there were bumps in the road, mainly that I overlooked the fact that Unity has a built-in way to get the mesh of a collider. Funnily enough, I spent at least a week iterating through points and building meshes from scratch before I figured that out. But even after I had, I was still creating a new mesh for everything every single frame. For something that was supposed to give me better performance, I knew this was not acceptable. So I went deeper down the rabbit hole: I thought it'd be so much faster if I pre-computed each collider's mesh as a texture. What if I just baked those ahead of time?
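A minimal sketch of the trigger-driven physics texture described above, assuming colliders arrive as polygons already expressed in texture pixel coordinates. The names `PhysicsTexture`, `on_trigger_enter`, `on_trigger_exit`, and `rebuild` are hypothetical stand-ins for Unity's trigger callbacks and the author's code, and the even-odd point-in-polygon test is a swapped-in rasterization technique, not necessarily what the engine did. Note that `rebuild` re-rasterizes every tracked collider on every call, which mirrors the per-frame cost the paragraph calls unacceptable.

```python
def point_in_polygon(px, py, poly):
    """Even-odd rule: count how many polygon edges a ray to the right crosses."""
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > py) != (y2 > py):  # this edge spans the ray's y
            x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            if px < x_cross:
                inside = not inside
    return inside


def rasterize(poly, width, height):
    """Turn one polygon collider into a boolean mask, sampling pixel centers."""
    return [[point_in_polygon(x + 0.5, y + 0.5, poly) for x in range(width)]
            for y in range(height)]


class PhysicsTexture:
    """Tracks colliders inside the screen trigger and draws them into one texture."""

    def __init__(self, width, height):
        self.width, self.height = width, height
        self.on_screen = {}  # collider id -> polygon in pixel coordinates

    def on_trigger_enter(self, collider_id, polygon):
        self.on_screen[collider_id] = polygon

    def on_trigger_exit(self, collider_id):
        self.on_screen.pop(collider_id, None)

    def rebuild(self):
        """Naive per-frame rebuild: re-rasterize every tracked collider."""
        tex = [[False] * self.width for _ in range(self.height)]
        for poly in self.on_screen.values():
            mask = rasterize(poly, self.width, self.height)
            for y in range(self.height):
                for x in range(self.width):
                    if mask[y][x]:
                        tex[y][x] = True
        return tex
```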

The first version of the physics texture overlaid in green over the stage

Beginning to Bake

I had heard of baking before this, but never actually knew what it meant. It is essentially the pre-computation of data so that it's ready to use later; maybe it's an analogy to baking the meal before the guests show up. So when I say I baked the texture, I mean I computed it once ahead of time and then serve it something like 60 times a second, which is maybe where the food analogy falls apart. The technical part was really just this: when the trigger found a new collider, compute its mesh texture and cache it in a hash map, with the collider as the key and the texture as the value. Pretty simple, but it really did work. After I implemented it, the game had GPU-raycasted lighting and baked textures, and ran at around 150 fps on the first stage. I was riding pretty high, thinking thoughts like "this wasn't that bad," but then I played through the game and realized that the third stage was running at a measly 3 fps, twenty times slower than my 60 fps bare-minimum benchmark. After all that work, seeing that number was soul-crushing. What was I missing? How could this be running so poorly? Why did it run so well when the stage was small? Probably because there wasn't much on the stage, right?
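The bake-once cache can be sketched as a dictionary keyed by collider. In this hypothetical Python version, the key is a string id standing in for the collider reference, and `bake_box` is a placeholder bake function that fills a solid rectangle; a real bake would rasterize the collider's mesh. The `evict` method is an added assumption for the case where a collider changes shape and its baked texture goes stale.

```python
def bake_box(size):
    """Placeholder 'expensive' bake: a solid (width, height) rectangle mask."""
    w, h = size
    return [[True] * w for _ in range(h)]


class BakeCache:
    """Bake each collider's texture once, the first time the trigger sees it,
    then serve the cached copy on every later frame."""

    def __init__(self, bake_fn):
        self.bake_fn = bake_fn  # collider -> texture (the slow part)
        self.cache = {}         # collider id -> baked texture
        self.bake_count = 0     # how many real bakes have happened

    def get(self, collider_id, collider):
        tex = self.cache.get(collider_id)
        if tex is None:         # first sighting: bake and remember it
            tex = self.bake_fn(collider)
            self.cache[collider_id] = tex
            self.bake_count += 1
        return tex

    def evict(self, collider_id):
        """Forget a stale bake so the next get() re-bakes it."""
        self.cache.pop(collider_id, None)
```

Calling `get` sixty times for the same collider bakes it once; every later call is just a dictionary lookup.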

Double Baking

So I opened the Unity profiler and deleted all the trees in the third scene. It went from 3 fps to around 150, just like the first stage. Obviously I couldn't reduce the number of trees, so why not reduce the number of textures? Since each tree has a baked texture, what if I just combined all of them into one giant texture? I liked the idea, but this time I caught the hidden complexity. I planned to add cutting or breaking trees as a mechanic later, so I'd need to modify this big texture during gameplay, and that would have to be performant. Making the giant texture was actually relatively easy, since the logic was almost all already written; it just needed some rewiring. The texture had to be created right after map generation, and I had to add a way to filter which colliders should be baked into the big texture and which shouldn't. I took the colliders on the stage, ran one giant bake to flatten them all into one texture, then stored that as a 'collider' that was always on screen as far as the trigger was concerned. That made my fps skyrocket, with highs of 300 and lows of 200 fps in the editor. I was pretty happy, but I remembered I still had one last issue to solve: the removal of trees.
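Flattening the static colliders into one big texture, while remembering where each one landed, might look like the following sketch. It assumes each baked texture is a 2D list of booleans placed by its top-left pixel, and it uses a hypothetical `static` flag as the filter for which colliders get baked in; dynamic ones are skipped and keep their own per-object textures. The returned `bounds` dictionary records each collider's rectangle in the big texture, which is what removing a tree later relies on.

```python
from dataclasses import dataclass


@dataclass
class BakedCollider:
    cid: str       # hypothetical id standing in for the collider reference
    x: int         # top-left pixel in the big texture
    y: int
    mask: list     # baked 2D list of booleans
    static: bool = True


def build_big_texture(width, height, colliders):
    """Stamp every static collider's mask into one texture and record bounds."""
    big = [[False] * width for _ in range(height)]
    bounds = {}  # collider id -> (x, y, w, h) inside the big texture
    for c in colliders:
        if not c.static:
            continue  # dynamic colliders keep their own per-object texture
        h, w = len(c.mask), len(c.mask[0])
        for y in range(h):
            for x in range(w):
                if c.mask[y][x]:
                    big[c.y + y][c.x + x] = True
        bounds[c.cid] = (c.x, c.y, w, h)
    return big, bounds
```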

All the baked colliders are in white and the dynamically created textures are in green

Removing Colliders from the Big Texture

The scenario is that we have an object and we want to remove its texture from the big texture. Recomputing the whole thing would be too costly, especially since we're doing it while the player is playing, not during a loading screen. So we need to surgically remove just the area that was occupied by the collider's texture. To do that, you need to cache the bounds of each collider's texture within the big texture, so that given the collider you can look up the region that needs to be re-rendered. But what if two objects overlap? Won't their textures overlap too? Yes, they will, which means we need to find every object that is even slightly inside the bounds of the texture in question and re-render it as well. But how do we get all the colliders within certain bounds? I needed a way to quickly answer 'what objects are near this spot?' A quad tree is perfect for this: it's basically a spatial index that divides your world into smaller and smaller rectangles, making lookups super fast. I grabbed some code online to set one up, and initialized it when I cached the collider locations in the big texture. So when I wanted to remove a tree (the leafy kind), I'd use the quad tree to return all objects in the vicinity of the collider's texture so I could re-render them in the big texture. I also wrote two new compute shaders: one to clear the region and another to re-render it from all the objects that overlap it. That was a lot, huh? Yeah, all just to remove a tree, and all to implement something Unity already had!
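The removal path can be sketched in Python as below. The quad tree is a small hand-written stand-in (the code the author found online isn't shown), storing each collider's cached bounds as an `(x, y, width, height)` rectangle. `remove_from_big_texture` is a hypothetical name that plays the role of the two compute shaders: it clears the removed collider's rectangle, asks the quad tree for every remaining collider overlapping that rectangle, and re-stamps their baked masks clipped to the cleared region. It assumes all bounds lie inside the big texture.

```python
def overlaps(a, b):
    """True if rectangles a and b, each (x, y, w, h), share any area."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    return ax < bx + bw and bx < ax + aw and ay < by + bh and by < ay + ah


def contains(outer, inner):
    """True if rectangle `inner` lies entirely inside rectangle `outer`."""
    ox, oy, ow, oh = outer
    ix, iy, iw, ih = inner
    return ox <= ix and oy <= iy and ix + iw <= ox + ow and iy + ih <= oy + oh


class QuadTree:
    """Minimal region quad tree: an item lives in the deepest node whose
    quadrant fully contains its rectangle."""

    def __init__(self, bounds, capacity=4, depth=0, max_depth=6):
        self.bounds = bounds
        self.capacity, self.depth, self.max_depth = capacity, depth, max_depth
        self.items = []       # (item_id, rect) pairs stored at this node
        self.children = None  # four sub-quadrants once this node splits

    def _push_down(self, item):
        for child in self.children:
            if contains(child.bounds, item[1]):
                child.insert(*item)
                return True
        return False

    def _split(self):
        x, y, w, h = self.bounds
        hw, hh = w / 2, h / 2
        quads = [(x, y, hw, hh), (x + hw, y, hw, hh),
                 (x, y + hh, hw, hh), (x + hw, y + hh, hw, hh)]
        self.children = [QuadTree(q, self.capacity, self.depth + 1, self.max_depth)
                         for q in quads]
        staying = []  # items straddling a quadrant boundary stay here
        for item in self.items:
            if not self._push_down(item):
                staying.append(item)
        self.items = staying

    def insert(self, item_id, rect):
        if self.children is not None and self._push_down((item_id, rect)):
            return
        self.items.append((item_id, rect))
        if (self.children is None and len(self.items) > self.capacity
                and self.depth < self.max_depth):
            self._split()

    def remove(self, item_id, rect):
        for i, (iid, _) in enumerate(self.items):
            if iid == item_id:
                del self.items[i]
                return True
        for child in self.children or []:
            if contains(child.bounds, rect) and child.remove(item_id, rect):
                return True
        return False

    def query(self, rect, found=None):
        """Collect the ids of all items whose rectangles overlap `rect`."""
        found = [] if found is None else found
        if overlaps(self.bounds, rect):
            found.extend(iid for iid, r in self.items if overlaps(r, rect))
            for child in self.children or []:
                child.query(rect, found)
        return found


def remove_from_big_texture(big, masks, bounds, tree, removed_id):
    """Erase one collider from the big texture without rebuilding all of it."""
    x0, y0, w, h = bounds.pop(removed_id)
    tree.remove(removed_id, (x0, y0, w, h))
    masks.pop(removed_id)
    # "Clear" pass: wipe the removed collider's rectangle.
    for y in range(y0, y0 + h):
        for x in range(x0, x0 + w):
            big[y][x] = False
    # "Re-render" pass: redraw overlapping neighbors, clipped to that rectangle.
    for cid in tree.query((x0, y0, w, h)):
        cx, cy, cw, ch = bounds[cid]
        mask = masks[cid]
        for y in range(max(y0, cy), min(y0 + h, cy + ch)):
            for x in range(max(x0, cx), min(x0 + w, cx + cw)):
                if mask[y - cy][x - cx]:
                    big[y][x] = True
```

Clipping the re-stamp to the cleared rectangle is enough: a neighbor's pixels outside that rectangle were never erased, so only the overlap has to be redrawn.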

The third stage and a work-in-progress enemy that destroys trees

Conclusion

Even though I originally said that I wasted my time, when all is said and done, what actually matters more: getting something out as quickly as possible, or learning skills? I know for a fact that if I had used Unity's built-in 2D lighting system, I'd already have three more months' worth of other features in this game, but I'd also know nothing about compute shaders. Learning about compute shaders made me realize how I could efficiently store things like liquid splats, decals, or other graphics on a texture, so that if a character bled somewhere on the map, the blood could stay there forever without hurting performance. Another idea that came to mind was writing characters' and enemies' velocities to a texture for a grass shader to read, so the grass moves when you walk past it. Neither of these effects comes out of the box in Unity, and both require shader knowledge to implement. So, did I fall victim to the classic sunk cost fallacy, or to scope creep? Totally, yes. Do I regret it? No. I learned a lot tumbling down the rabbit hole, but I'm really going to try not to do it again so I can finally finish something.

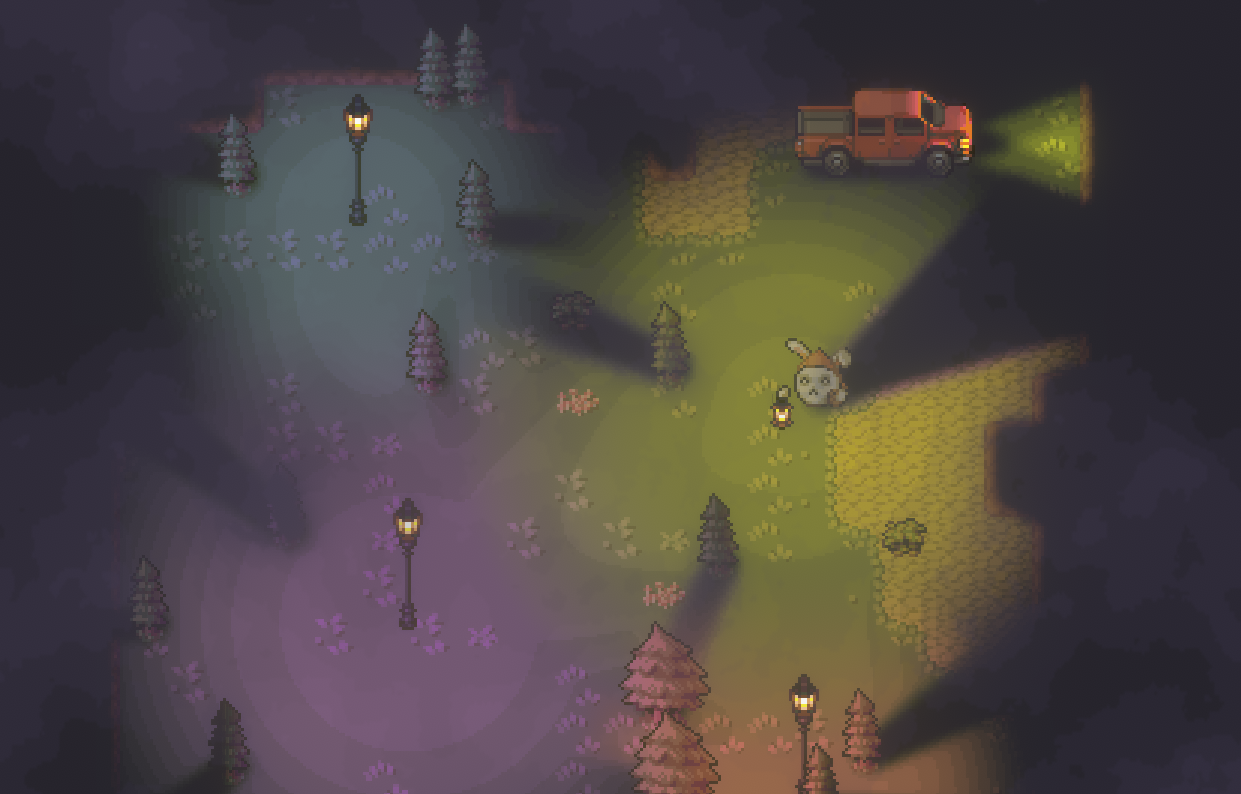

A final look at what I have now

The Future

As for features to add to the lighting engine in general, I'd love to simulate some sort of height in the shadows, meaning shadows for low-lying bushes would be short and trees' shadows would be long. This seems not impossible, but complicated. I also still want to add normal maps extremely badly, but I'm not even looking at that until I update the random generator, since that is far more important. So hopefully the next blog post I write about the game will be about converting my current generator from stack-based to node-based generation, a change that should allow for regional generation, like biomes and structures. To be honest, I don't really know why I didn't build it that way in the first place. Thanks for reading, and follow me on Bluesky!